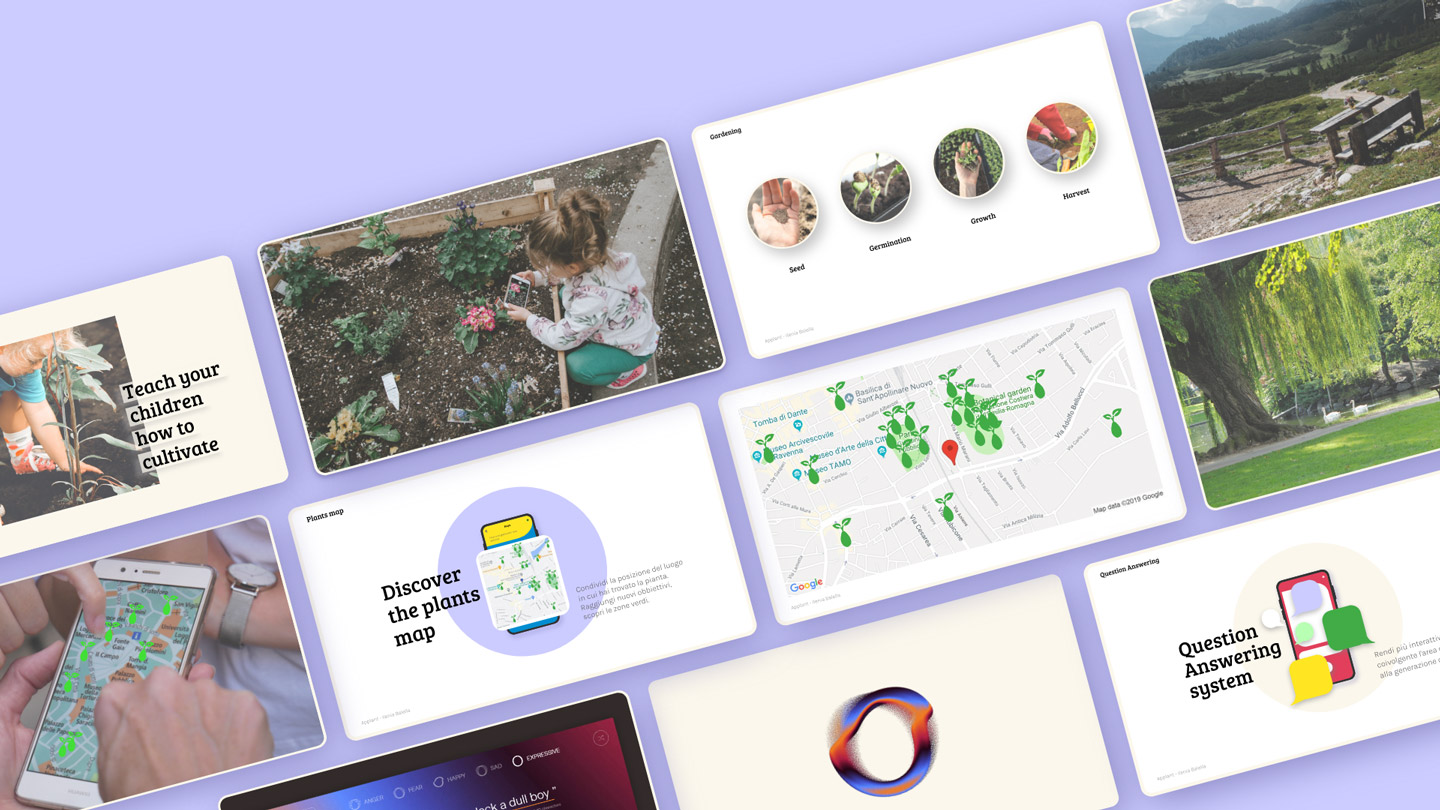

Interaction design & machine learning

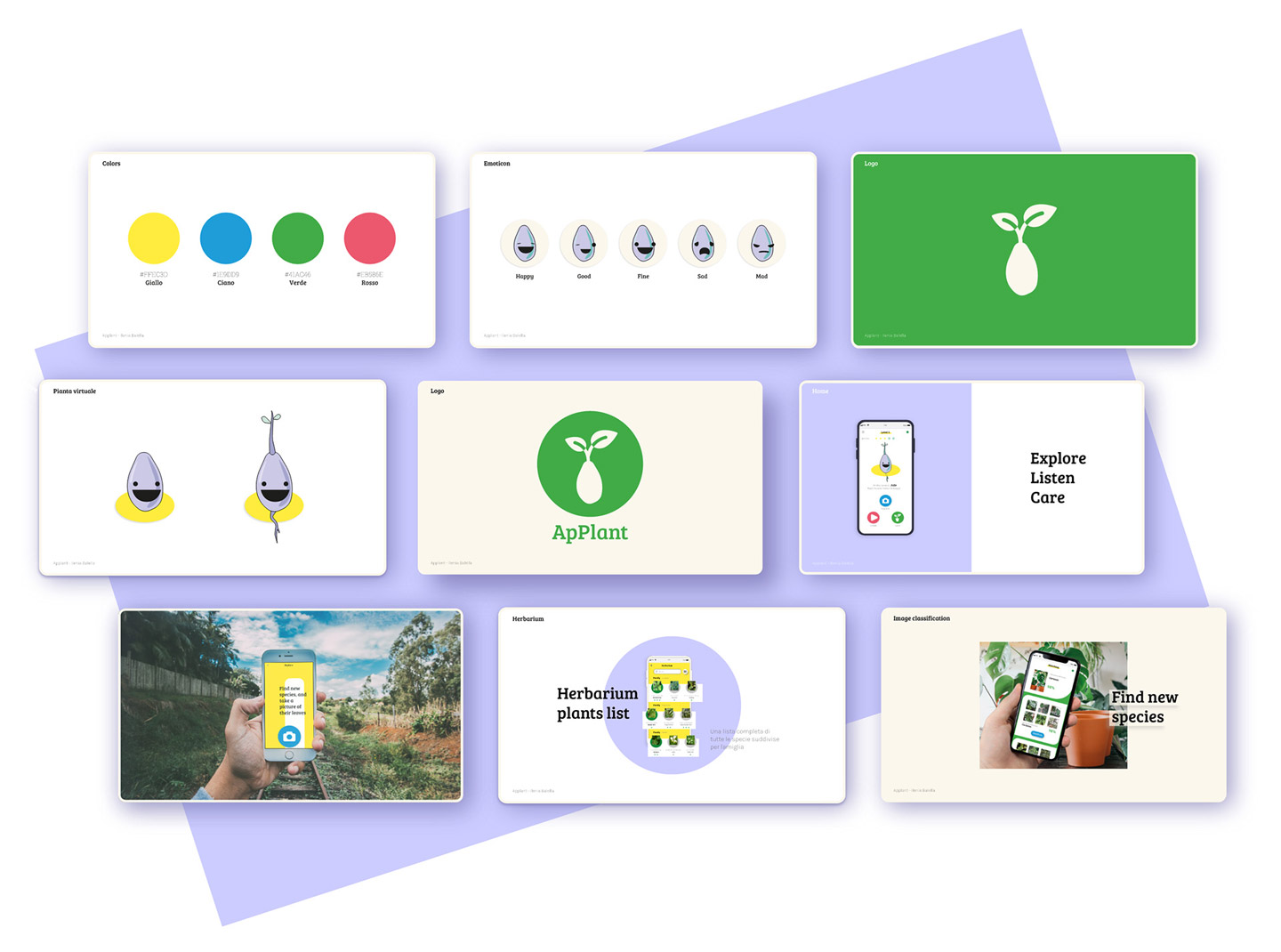

ApPlant

Master’s degree thesis | 110/110 cum laude

This thesis aims to investigate the communicative potential of artificial intelligence, with particular regard to the possibility of generating awareness toward climate change.

objective

Human beings understand and communicate through verbal and non-verbal language, using filters that guide their choices, such as emotions. Notably, the ability to understand others' emotions, known as empathy, affects and shapes human behavior. However, people need comprehensible stimuli (such as expressions, body language, vocal cues, and semantics) to enter directly into 'affective empathy' with others. Therefore, to create an emotional bond between humans and plants, it may be necessary to provide the latter with a language akin to the human one. So, if plants could be equipped with words and feelings, what would they be able to express? What would they think about climate change?

WHAT IT IS

This project seeks to address these questions through machine learning's ability to process natural language and recognize images. By strategically coupling the virtual and the real, it encourages climate-change awareness and respectful behavior toward the environment.

To this end, I propose a digital-assistance approach delivered through a smartphone. The project idea is an app that assists the user in taking care of their plants and, at the same time, generates an empathic dialogue with the plants themselves, which may in turn prompt the user to reflect more deeply on environmental issues.

TECHNICAL PROCESSES

Two types of algorithms were used: a natural language processing model, which generates an environmentally themed story, and an image recognition model, which identifies the species a plant belongs to.

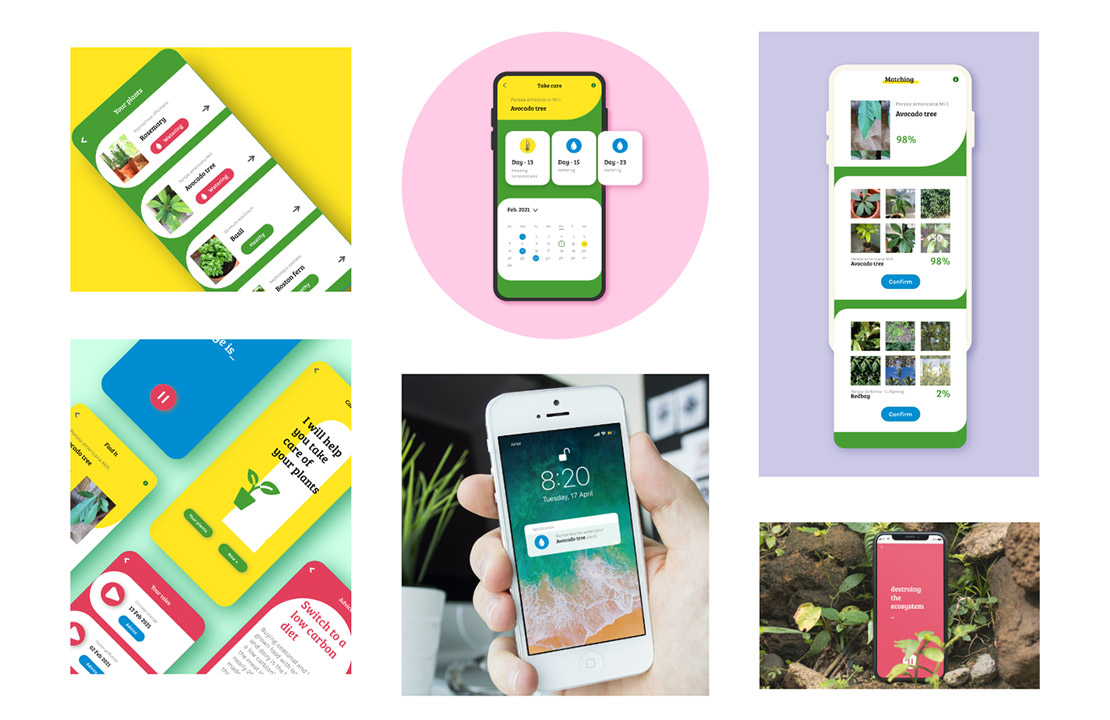

I structured the project into three paths (Explore, Listen, and Care), using gamification mechanisms, and designed a virtual plant that goes through six stages of growth, from seed to fruit. The virtual plant grows thanks to the points collected by the user, whose task is to take as many pictures of plant species as possible. The points collected also affect the virtual plant's mood, which is conveyed through its facial expressions and the semantics of the NLP-generated text.
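The point mechanism described above can be sketched roughly as follows. This is only an illustrative sketch, not the app's actual logic: the intermediate stage names, the point thresholds, and the mood rule are all hypothetical, since the text only fixes six stages running from seed to fruit and states that points influence mood.

```python
# Hypothetical stage names: only "seed" (first) and "fruit" (last) come from the text.
STAGES = ["seed", "sprout", "seedling", "young plant", "flowering", "fruit"]

# Hypothetical number of points needed to reach each stage.
THRESHOLDS = [0, 10, 25, 50, 80, 120]


def growth_stage(points: int) -> str:
    """Return the most advanced stage whose threshold has been reached."""
    stage = STAGES[0]
    for name, needed in zip(STAGES, THRESHOLDS):
        if points >= needed:
            stage = name
    return stage


def mood(points: int) -> str:
    """Hypothetical mood rule: more collected points means a happier plant."""
    if points >= 50:
        return "happy"
    if points >= 10:
        return "neutral"
    return "sad"


if __name__ == "__main__":
    for collected in (0, 30, 120):
        print(collected, growth_stage(collected), mood(collected))
```

Keeping growth and mood as two separate functions of the same score lets the facial expression change more often than the growth stage, which is one possible way to keep the feedback lively between stages.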

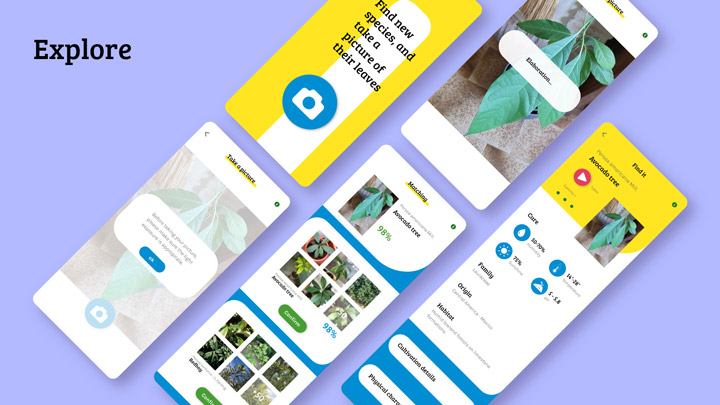

Explore

In the Explore section, the user uploads their pictures, which are analyzed by the image recognition algorithm. Once the identification process is over and the prediction is confirmed, additional information about the species is displayed.

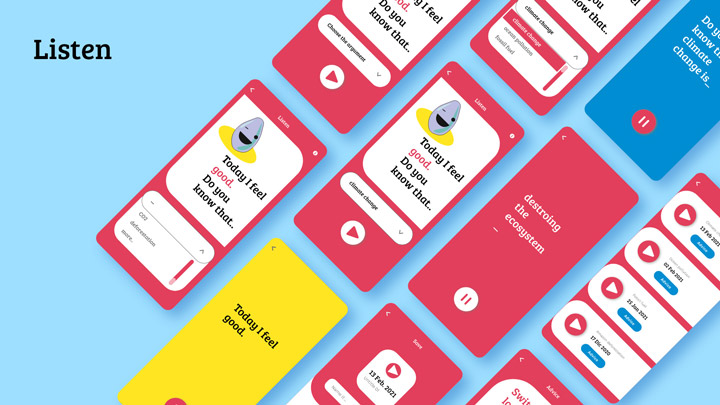

Listen

In the Listen section, the user can listen to short stories generated by an NLP algorithm trained on reports about climate change. The background color follows the semantics of the text, adding further expressiveness to the message.

Care

In the Care section, the system sets up a calendar with reminders and alerts the user with notifications indicating, for example, when to water their plants.
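As a rough illustration of how such a reminder calendar could be filled in, the sketch below computes the next watering dates for one plant. The function name `watering_reminders` and the fixed watering interval are assumptions made for this example; the text does not specify how the schedule is derived.

```python
from datetime import date, timedelta
from typing import List


def watering_reminders(last_watered: date, every_days: int, count: int) -> List[date]:
    """Return the next `count` watering dates, spaced `every_days` apart.

    Hypothetical helper: assumes each plant has a fixed watering interval.
    """
    if every_days <= 0:
        raise ValueError("every_days must be positive")
    return [last_watered + timedelta(days=every_days * i) for i in range(1, count + 1)]


if __name__ == "__main__":
    # Example: a plant last watered on 1 March 2024, watered every 3 days.
    for day in watering_reminders(date(2024, 3, 1), every_days=3, count=3):
        print("Water your plant on", day.isoformat())
```

Each returned date could then be turned into a notification by whatever scheduling service the app relies on.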

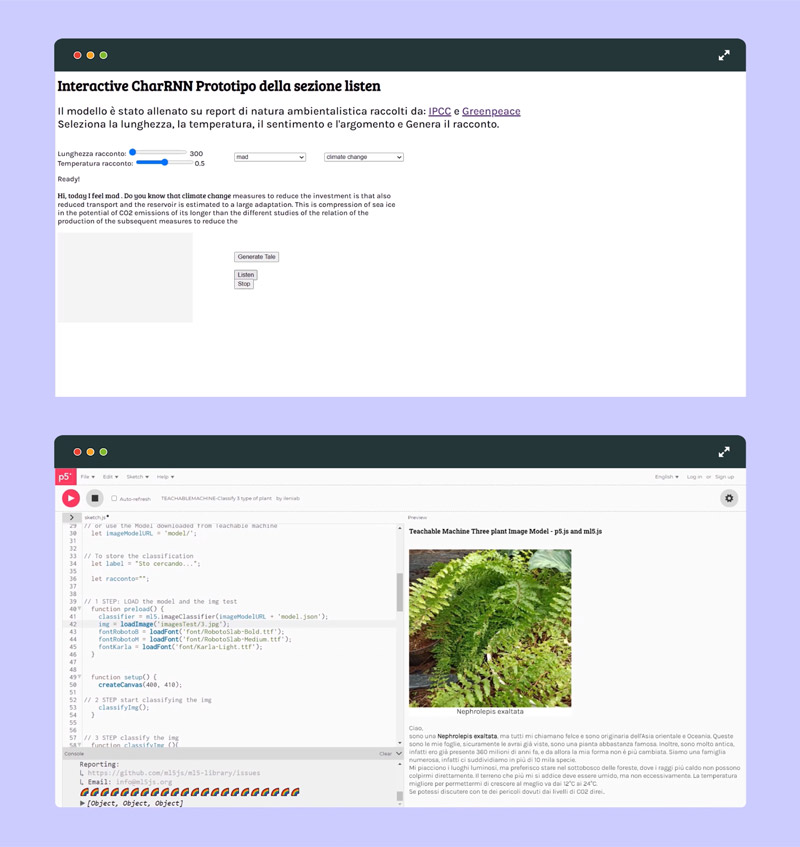

Prototype

Throughout the design process, I experimented with the technology by developing two working prototypes. The first generates text strings with CharRNN (trained on reports about climate change), whereas the second recognizes the taxonomic category to which a plant belongs by employing p5.js (trained on an open-source database of images categorized by plant species).

It was an interesting and stimulating experience, especially because these technologies open up countless possibilities for new ways of interacting and new tools that accompany and assist us in our daily lives, helping us improve our habits and make more conscious choices, in my case toward the environment.